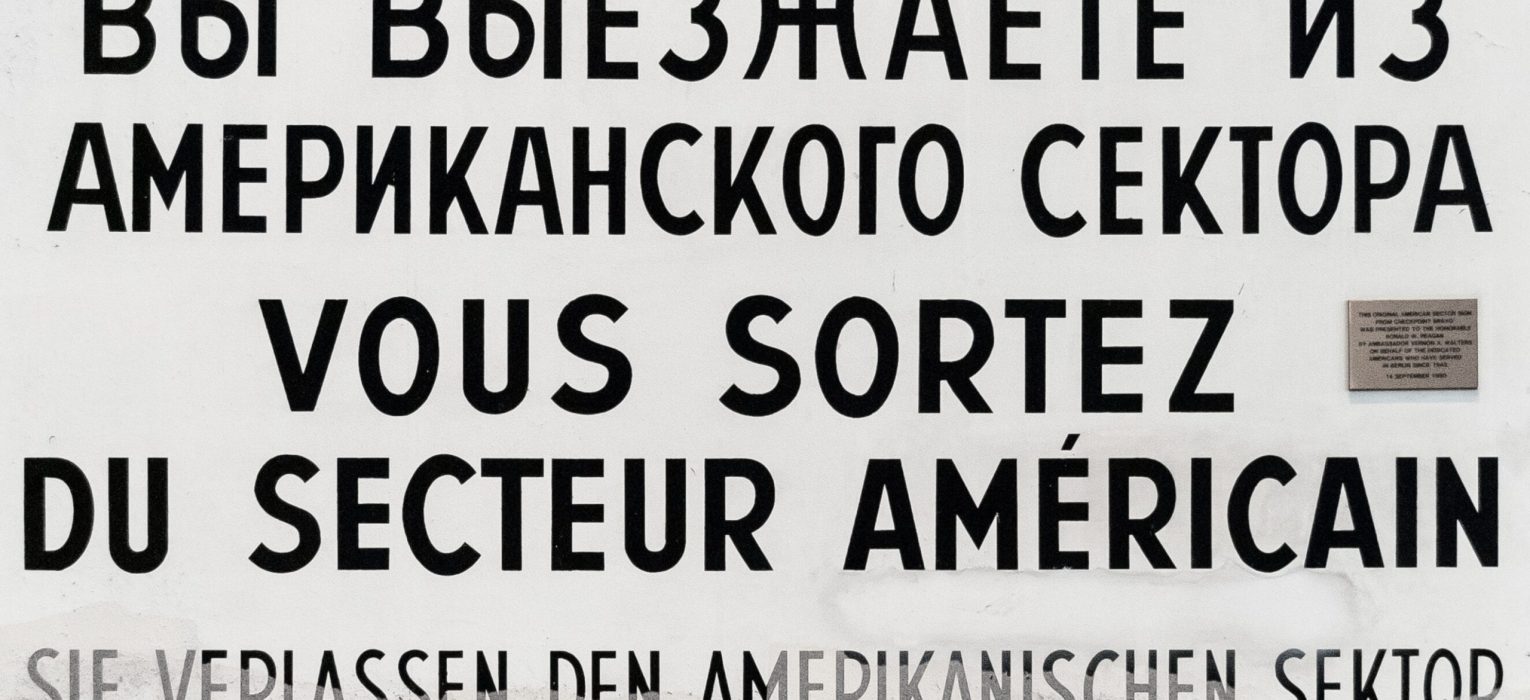

Even after decades of innovation in machine translation, human translators are necessary for post-edits and software improvements so that the machine translations are understandable.

With the rise of technology, the field of translation has changed drastically. Machine translation (MT) has massively cut both the time and the cost of initial translations. That being said, there are still big gaps in MT quality that leave results inaccurate and leave readers lost without the original linguistic context. While the goal is for machines to catch and fix their own errors, they are not yet advanced enough to do so or to accurately translate culturally important language and context into target languages without compromising the original meaning.

THE RESEARCH

In “Involving Language Professionals in the Evaluation of Machine Translation,” Maja Popović (2014) from DFKI and her coauthors evaluated six MT systems (Lucy MT, Moses, Google Translate, Trados, Jane, Rbmt) using three quality measures that human translators produced while reviewing the machine output: overall ranking, error analysis, and the level of post-editing needed. The most relevant of these measures to editing is error identification and, more specifically, the identification of errors in lexical choice, or the choice one (the MT system in this case) makes to use a certain word to indicate a specific meaning. The source and target languages in the study included English, German, French, Spanish, and Czech.

The researchers used MT systems that had been trained to differing degrees on language corpora from two domains: news and technical documentation. Using the MT systems, the researchers then translated 1,000 sentences from news texts and 400 sentences of technical documentation texts. The MT systems then compared the translated sentences to sentences in the two corpora of the original language. The systems ranked the sentences based on how well they matched the original language, with low-ranking sentences then being reviewed by human translators. The human translators reviewed the translations to rank the translations, to categorize errors to see where consistent errors occurred, and to determine which MT systems required the most post-translation editing.
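The routing step described above, where low-ranking sentences are passed to human translators, can be sketched roughly as follows. This is a minimal illustration and not the researchers' actual pipeline: the `Translation` record, the `score` field, the `select_for_review` helper, the 0.5 threshold, and the sample sentences are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Translation:
    system: str    # name of the MT system (placeholder labels here)
    source: str    # original sentence
    output: str    # machine-translated sentence
    score: float   # hypothetical automatic quality score, higher is better


def select_for_review(translations, threshold=0.5):
    """Return translations scoring below the threshold, worst first."""
    low = [t for t in translations if t.score < threshold]
    return sorted(low, key=lambda t: t.score)


# Invented example data, for illustration only (not from the study).
batch = [
    Translation("system_a", "Guten Morgen", "Good morning", 0.92),
    Translation("system_b", "Guten Morgen", "Good tomorrow", 0.31),
    Translation("system_a", "Das ist mir Wurst", "That is sausage to me", 0.12),
]

review_queue = select_for_review(batch)
for item in review_queue:
    print(f"{item.system}: {item.output!r} (score {item.score})")
```

Sorting the queue worst-first is a design choice for the sketch: it puts the sentences most likely to mislead a reader in front of the human reviewer first.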

The researchers found that no single system produced clearly fewer errors than the others. This was likely because each MT system was trained for only one of the two domains or for neither. Based on the human translators’ categorization of errors, the researchers also found that “the most frequent correction for all systems is the lexical choice.… The main weak point of all systems [is] incorrect lexical choice.” In a second evaluation round where the MT systems were all trained on both domains, they all increased their lexical-choice scores significantly, with Trados improving the most: from about 26% correct lexical translation to 68%.
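To make the error-categorization step concrete, here is a small sketch of how post-edit annotations might be tallied to find each system's most frequent error type. The system labels, the category names other than lexical choice, and the annotation records are invented for illustration and are not data from the study.

```python
from collections import Counter, defaultdict


def tally_errors(annotations):
    """Count error categories per system from (system, category) pairs."""
    counts = defaultdict(Counter)
    for system, category in annotations:
        counts[system][category] += 1
    return counts


def most_frequent_error(counts):
    """Map each system to the error category it was corrected for most often."""
    return {system: c.most_common(1)[0][0] for system, c in counts.items()}


# Invented annotations: one entry per correction a human post-editor made.
annotations = [
    ("system_a", "lexical_choice"),
    ("system_a", "lexical_choice"),
    ("system_a", "word_order"),
    ("system_b", "lexical_choice"),
    ("system_b", "missing_word"),
    ("system_b", "lexical_choice"),
]

error_counts = tally_errors(annotations)
top_errors = most_frequent_error(error_counts)
print(top_errors)
```

A tally like this is what lets reviewers see whether one category, such as lexical choice, dominates across systems rather than being a quirk of a single one.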

THE IMPLICATIONS

This study provides data indicating that human intervention is necessary in MT. Without human editors, MT output may be incorrect or unfaithful to the original meaning of a text, especially when it comes to lexical choices.

“The most frequent correction for all systems is the lexical choice…the main weak point of all systems [is] incorrect lexical choice.” —Maja Popović

Popović (2014)

To keep valuable information from being misconstrued, translators need to catch the machine-based errors and recalibrate the systems so they are trained adequately on the language domains they are being used for. But even when MT systems are calibrated to specific language domains, their accuracy is not perfect, so the best way to catch errors at this point is to use human translators and editors who understand the original language, the target language, and the context of the words.

To learn more about the human contribution to machine translations, read the full article:

Popović, Maja et al. 2014. “Involving Language Professionals in the Evaluation of Machine Translation.” Language Resources and Evaluation Volume 48, Issue 4 (2014): pp. 541-559. https://doi.org/10.1007/s10579-014-9286-z.

—Julia Provost, Editing Research

FEATURE IMAGE BY ETIENNE GIRARDET

Find more research

Read Philipp Koehn’s (2009) article to see how well MT systems aid human translation efforts: “A process study of computer-aided translation.” Machine Translation Volume 23, Issue 4 (November 2009): pp. 241-263. http://dx.doi.org/10.1007/s10590-010-9076-3.

Take a look at Nadeshda K. Riabtseva’s (1987) article to learn how translators and editors go about recording errors to improve MT systems: “Machine Translation Output and Translation Theory.” Computers and Translation Vol. 2, Issue 1 (Jan. 1987): pp. 37-43. http://dx.doi.org/10.1007/BF01540132.